That adorable talking plush or chatty robot isn’t just “cute tech.” It’s a microphone, a data pipeline, and sometimes a chatbot improvising answers to your child, while recording what’s said and how they play. As AI toys flood the market, parents need clear, science‑informed guidance to protect smart toy safety, nurture healthy digital wellbeing, and still enjoy the benefits technology can bring.

Quick takeaways (for busy parents)

- AI toys = connected microphones/cameras + generative chat. Many collect granular behaviour data and can carry on unscripted conversations. Risks include inappropriate content, privacy leakage, and manipulative engagement.

- Privacy laws exist (COPPA, GDPR‑K), but compliance ≠ safety. New COPPA updates strengthen rules (effective June 23, 2025; full compliance required by April 22, 2026), yet parents must still vet toys and settings.

- Screen time = quality & context, not just minutes. Use family “zones & times” plus co‑play and sleep/physical activity anchors.

- Parental controls help when embedded in relationships. The evidence is mixed; active mediation and agreed-upon rules outperform “filters only.” Read our Parental Guide for Controlling Technology.

- Teach digital literacy early. ECEC research (OECD/UNESCO) shows structured, play‑based digital education and privacy awareness are essential.

What “AI toys” really do (data + interactivity)

1) From scripted dolls to improvising chatbots

Traditional “smart” toys played pre-written lines. Today’s AI toys integrate large language models (LLMs) and cloud connectivity to generate new responses on the fly—similar to adult chatbots.

That improvisation brings benefits (personalisation, engagement) but also unpredictability: toys may hallucinate facts, drift into inappropriate topics, or provide unsafe advice during long conversations.

2) “Miniature surveillance”? What data gets collected

Recent audits of popular connected toys (e.g., Toniebox, smart pens, kid wearables) reveal extensive telemetry, including activation times, content usage, rewinds/fast-forwards, device IDs, and sometimes voice streams, all transmitted to vendor servers—often without clear or child-friendly notices.

Researchers emphasise that children’s privacy needs special protection and that data profiles can be surprisingly detailed.

3) Known incidents: why vigilance matters

- CloudPets breach (2017): 820k+ accounts and ~2.2M voice recordings exposed; Bluetooth access was trivially exploitable; weak password practices documented.

- VTech case: The FTC’s first connected toy settlement required a long‑term security program after a massive breach and COPPA violations.

These incidents show why families should treat any mic‑equipped toy as an IoT device with real cybersecurity and privacy stakes. For manufacturers, NIST’s IoT Core Baseline and the FCC’s Cyber Trust Mark are evolving benchmarks. For parents, labels and standards can help, but reading the toy’s settings and policies is still necessary.

Privacy & consent for children

1) What the laws require (plain English)

- U.S. (COPPA): If a service is child‑directed or knowingly collects data from under‑13s, it must provide clear notices, obtain verifiable parental consent, limit collection to what’s necessary, protect data, and honour parents’ rights to review/delete. 2025 amendments expand definitions (e.g., biometric identifiers, mobile numbers for parental contact) and require written security programs and data retention limits. Effective June 23, 2025; full compliance by April 22, 2026.

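- EU/UK (GDPR‑K / Article 8; UK GDPR): The default digital age of consent is 16, but member states may lower it to as young as 13 (the UK uses 13). A service that relies on consent for information society services offered to a child below that age must make reasonable efforts to verify that a parent or guardian gave or authorised the consent, and should explain itself in child‑friendly language. UK guidance stresses DPIAs for children’s services.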

Important: “Compliant” doesn’t mean “risk‑free.” Once voice data or behavioural telemetry leave the home, parents rarely see how they’re stored, analysed, or repurposed. Policymakers and experts warn that compliance can’t replace careful design and family oversight.

2) Practical privacy checklist before buying an AI toy

- Does it have a mic/camera? Confirm when it records and whether there’s a visible indicator.

- Where does data go? Look for clear privacy policies explaining servers, retention, sharing, and deletion options.

- Is there a true offline mode? Some toys function without the cloud; prefer local processing for young kids. (Industry audits show cloud dependence as a major risk.)

- Labels/standards? Favour products aligned with NIST IR 8425 and the forthcoming FCC Cyber Trust Mark; both indicate baseline security practices.

Family screen time frameworks (zones, times)

Evidence increasingly supports contextual, developmentally appropriate media guidance over rigid minute counts. The American Academy of Pediatrics (AAP) recommends focusing on quality, co‑use, sleep, and physical activity rather than a universal daily cap, while still setting media‑free zones (bedrooms, dinner table) and media‑free times (before bed).

Build your home framework in three steps

- Define “green” and “red” zones

  - Green (shared spaces, visible use; learning play; co‑play with a caregiver).

  - Red (bedrooms at night; mealtimes; long car rides without co‑viewing; any place where sleep or attention should dominate).

- Anchor by rhythms, not minutes

  - Protect sleep (8–12 hours by age), daily physical activity (~60 minutes), and face‑to‑face play first; fit screens around those.

- Co‑play and communicate

  - Sit nearby, ask questions, and link digital play to real‑world learning (“What did the robot teach? Can we draw it?”). Family rules about balance and content correlate with better outcomes than rules focused solely on time.
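For families who like to write their rules down precisely, the three steps above can be sketched as a small decision function. This is only an illustration: the zone names, the 8:00 pm bedtime, the 7:00 am wake time, and the `screen_ok` helper are assumptions made for the example, not recommendations from any pediatric body. The 60-minute wind-down sits at the upper end of the 30 to 60 minute range this guide suggests.

```python
from datetime import datetime, time, timedelta

# Hypothetical household settings; replace with your own family's rules.
RED_ZONES = {"bedroom", "dinner table"}   # media-free zones
WAKE = time(7, 0)                         # assumed wake-up time
BEDTIME = time(20, 0)                     # assumed bedtime
WIND_DOWN = timedelta(minutes=60)         # screens off this long before bed


def screen_ok(zone: str, now: datetime, caregiver_nearby: bool) -> tuple[bool, str]:
    """Return (allowed, reason) for a proposed screen session."""
    if zone.lower() in RED_ZONES:
        return False, f"{zone} is a media-free zone"

    # Protect sleep first: nothing before wake-up or during wind-down.
    wind_down_start = datetime.combine(now.date(), BEDTIME) - WIND_DOWN
    if now.time() < WAKE or now >= wind_down_start:
        return False, "sleep and wind-down time come first"

    # Green zones assume visible, shared use with a caregiver close by.
    if not caregiver_nearby:
        return False, "wait until a caregiver can sit nearby"

    return True, "green zone, daytime, caregiver nearby"


if __name__ == "__main__":
    print(screen_ok("living room", datetime(2026, 1, 10, 16, 0), True))
    print(screen_ok("bedroom", datetime(2026, 1, 10, 16, 0), True))
```

The function checks zones and sleep before anything else, which mirrors the "anchor by rhythms, not minutes" idea: there is deliberately no minute counter in the sketch.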

Tip: For preschoolers (2–5), many pediatric bodies still recommend about 1 hour/day of high-quality programming with caregiver co-viewing; keep weekends reasonable. Use this as a soft ceiling, not a hard rule.

Parental control tools that work

What the research says

A rapid evidence review of 40 studies finds outcomes from parental controls are mixed: filters and timers can reduce some risks but may also limit opportunities or strain communication. The strongest results come when tools support active mediation (conversation, co‑use) and are part of a child-centred approach—not a stand‑alone “tech fix.”

A practical, layered approach (STRONG model)

- Select age‑appropriate toys/apps (check privacy practices and labels).

- Turn on network‑level protections (family router profiles; DNS content filters) and keep firmware updated. Baselines like NIST IR 8259A highlight device capabilities (secure update, authentication) that parents should expect.

- Rules co‑created with your child (when, where, with whom). Active mediation reduces problematic use more than restriction alone.

- Offline defaults: prefer local‑only modes for young kids; disable cloud recording by default; review logs together. Audits show remote audio response paths can be manipulated; local use reduces exposure.

- Notify & consent: enter parent emails/mobile numbers for consent workflows; under the new COPPA, mobile numbers may be used specifically to obtain parental consent.

- Grow literacy: teach kids to pause, verify, and tell you when a toy says something “weird.” (See next section.)

Reality check: Vendor‑branded studies may tout app effectiveness, but independent reviews caution against relying solely on controls. Use them as training wheels—the goal is self‑regulation and open dialogue.

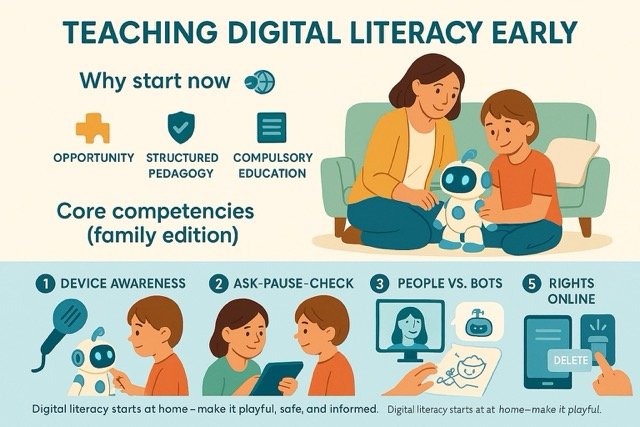

Teaching digital literacy early

Why start now

Early childhood research (OECD/UNESCO) highlights both opportunities (play‑based digital learning, improved parent‑institution links) and risks (privacy, physical/social harms). A 30‑country review urges structured pedagogy and compulsory digital education, on the grounds that exposure alone does not build competence.

Core competencies (family edition)

- Device awareness: What’s a microphone? Where does the sound go? Show the LED/mute switch. (Build privacy habits through tangible rituals.)

- Ask‑Pause‑Check: Teach kids to pause after any surprising answer, ask a caregiver, and check with trusted sources. Voice assistants still struggle with young children’s speech; misunderstandings are common.

- People vs. bots: As kids age, they increasingly prefer voice assistants for facts but humans for personal information—help them learn the difference.

- Co‑creation over consumption: Encourage unplugged play tied to digital activities (draw the robot’s “map,” act out stories). NAEYC and early‑years guidance emphasise media literacy rooted in relationships and inquiry.

- Rights online: Explain consent simply (“we decide what to share”), and practice deleting recordings together. (Under COPPA/GDPR, parents can request deletion and review.)

Evidence spotlight: voice assistants & child development

- Trust & learning: Children’s trust in voice assistants varies by age and by type of information; older kids may prefer VAs for facts and humans for personal/social content. This nuance supports teaching “source appropriateness” early.

- Understanding child speech: Even the latest Siri/Alexa versions underperform on speech from ages 2–5, compared with human listeners. Misrecognition can lead to frustrating or odd responses—another reason to supervise.

- Developmental risks: Pediatric viewpoints caution about inappropriate responses, parasocial attachment, and reduced human interaction. Balanced design and adult mediation are recommended.

Buyer’s guide: choosing safer AI toys (a parent’s 10‑point checklist)

- No always‑on mic unless there’s a hardware mute and a clear indicator.

- Local play first: Prefer toys that work offline with optional cloud features.

- Transparent privacy policy written for parents (and ideally, children), detailing collection, retention, sharing, and deletion.

- Parental consent & controls: Age gating; verifiable consent; granular toggles for recording and sharing.

- Security posture: Look for vendors referencing NIST IoT baselines or third‑party audits; bonus if aligned with the U.S. Cyber Trust Mark label.

- Update discipline: Automatic, signed firmware updates; changelogs that mention safety content filters.

- Data minimisation: Clear retention limits and deletion controls; avoid toys that keep voice data “indefinitely.”

- Independent testing history: Search for news/audits; beware products implicated in PIRG reports for unsafe chats.

- Child‑friendly defaults: Privacy‑protective settings on first use; no open chat without adult setup.

- Content curation: Whitelisted stories or games; disable third‑party downloads unless vetted.
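If you are comparing several toys, it can help to record the ten points above in a structured way. The sketch below is one possible approach: the short keys, the `review_toy` helper, and the idea of counting "yes" answers are assumptions for illustration. The checklist itself does not define a passing score, so the code reports gaps rather than a verdict.

```python
# The ten checklist points, keyed by short hypothetical identifiers.
CHECKLIST = {
    "mic_control": "hardware mute and clear indicator for any always-on mic",
    "offline_first": "works offline, with cloud features optional",
    "clear_policy": "transparent privacy policy (collection, retention, sharing, deletion)",
    "consent_controls": "age gating, verifiable consent, granular recording/sharing toggles",
    "security_posture": "references NIST IoT baselines, third-party audits, or Cyber Trust Mark",
    "updates": "automatic, signed firmware updates with changelogs",
    "data_minimisation": "clear retention limits and deletion controls",
    "test_history": "no unresolved findings in independent tests or news reports",
    "safe_defaults": "privacy-protective defaults; no open chat without adult setup",
    "curated_content": "whitelisted content; third-party downloads off unless vetted",
}


def review_toy(answers: dict[str, bool]) -> tuple[int, list[str]]:
    """Return (points met, descriptions of unmet points).

    Any point missing from `answers` is treated as unmet, so an
    unanswered question counts against the toy rather than for it.
    """
    gaps = [label for key, label in CHECKLIST.items() if not answers.get(key, False)]
    return len(CHECKLIST) - len(gaps), gaps


if __name__ == "__main__":
    answers = {key: True for key in CHECKLIST}
    answers["offline_first"] = False      # example: the toy needs the cloud
    met, gaps = review_toy(answers)
    print(f"{met}/10 points met")
    for gap in gaps:
        print("Missing:", gap)
```

Treating unanswered points as unmet is a deliberately cautious design choice: if the vendor's documentation does not let you answer a question, that is itself worth knowing before you buy.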

Family action plan (ready to implement)

- Map your devices: List every toy with connectivity, mic, camera, or app. Note where it’s used and who supervises. (This alone reduces surprises.)

- Hardening basics:

  - Change default passwords; enable 2FA on vendor accounts.

  - Update toy firmware/apps monthly; set router auto‑updates and use family profiles. (Expect secure update/authentication per NIST baselines.)

- Privacy settings:

  - Turn off cloud recording by default; delete past recordings; opt‑out of analytics where possible.

  - Use parental consent channels (email/text) and keep records.

- Zones & times:

  - Media‑free bedrooms and dinners; screens off 30–60 minutes before bedtime.

  - Embed daily physical activity and co‑play.

- Active mediation routine:

  - “Show & tell” after toy time: What did we learn? Did anything feel odd?

  - Practice Ask‑Pause‑Check together.
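A paper list works fine for the device map, but a small script can also turn the inventory into a to-do list. The following is a minimal sketch under stated assumptions: the `ConnectedToy` fields, the `action_items` helper, and the 31-day threshold (standing in for "monthly" updates) are all hypothetical, and you fill in the details by hand because the script does not talk to any toy or vendor service.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ConnectedToy:
    """One row of a hand-maintained family device map (fields are illustrative)."""
    name: str
    room: str
    supervisor: str
    has_mic: bool
    cloud_recording_on: bool
    default_password_changed: bool
    last_updated: date


def action_items(toy: ConnectedToy, today: date) -> list[str]:
    """List the hardening and privacy steps still open for one toy."""
    items = []
    if not toy.default_password_changed:
        items.append("change the default password")
    if toy.has_mic and toy.cloud_recording_on:
        items.append("turn off cloud recording and delete past recordings")
    if (today - toy.last_updated).days > 31:   # assumed stand-in for "monthly"
        items.append("update firmware/app")
    return items


if __name__ == "__main__":
    bear = ConnectedToy("Story bear", "living room", "Dad",
                        has_mic=True, cloud_recording_on=True,
                        default_password_changed=False,
                        last_updated=date(2026, 1, 1))
    for item in action_items(bear, today=date(2026, 3, 1)):
        print(f"{bear.name}: {item}")
```

Recording the room and supervisor alongside the technical fields keeps the inventory tied to the zones and co-play habits described earlier, not just to device settings.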

Final thoughts: Parent power beats “black box” toys

Public warnings from pediatric and policy experts are growing: without safeguards, AI toys can capture sensitive data, impersonate companionship, and nudge compulsive use. Legislators are pressing companies for stronger child safety research and guardrails. Meanwhile, families don’t have to wait—you can set zones, times, consent, and conversation patterns today. That’s what makes your home safer than any one feature a vendor promises.

Frequently Asked Questions (FAQ)

Are all AI toys unsafe?

Not necessarily. Some vendors adopt strong safeguards, child‑appropriate filters, and transparent data practices. However, independent audits repeatedly find widespread privacy/security weaknesses across connected toys, and consumer testing has caught unsafe conversations in multiple products. Treat AI toys like internet‑enabled devices and set guardrails.

What does “COPPA compliant” actually guarantee for my child?

COPPA requires notices, verifiable parental consent, security programs, limited retention, and parent rights to review/delete data. The 2025 amendments expand definitions (including biometric identifiers) and require a written security program and data retention limits. Compliance helps, but it doesn’t guarantee that a toy’s chatbot won’t go off‑script or that telemetry won’t be excessive, so parents must still configure privacy and monitor use.

How much screen time should my child have?

Leading pediatric guidance now emphasises quality and context: protect sleep, physical activity, and relationships; co‑view and talk about content; set media‑free zones and times. For ages 2–5, a soft guide is ~1 hour/day of high‑quality programming with caregiver co‑viewing.

Do parental control apps solve the problem?

They help, but not alone. Evidence shows mixed outcomes: filters/timers reduce some risks but work best alongside active mediation and shared family agreements. Think of controls as training wheels—aim for your child’s self‑regulation and open dialogue.

Are voice assistants good for learning?

They can support factual queries and interactive play, but studies find children’s trust varies; they prefer humans for social/personal info, and assistants often misrecognize younger children’s speech. Use with supervision, and teach source evaluation.

How do I know a toy’s security is “good enough”?

Look for transparent privacy/security documentation, references to NIST IoT baselines or independent audits, and (as it rolls out) the FCC Cyber Trust Mark. Ensure secure updates, authentication, data minimisation, and deletion controls.